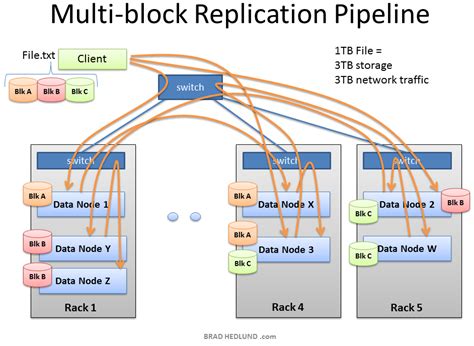

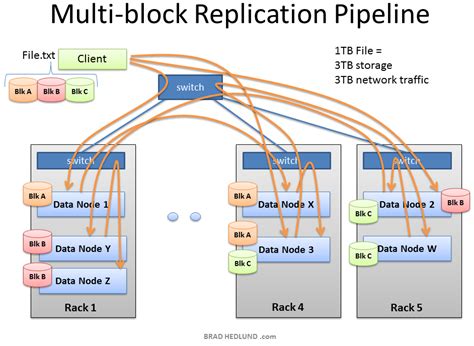

HDFS replication factor: Hadoop file block replication factor (2024-11-01)

Replication of blocks. HDFS is the reliable storage component of Hadoop because every block stored in the filesystem is replicated on different DataNodes in the cluster. This replication is what makes HDFS fault-tolerant.



Hadoop HDFS is designed mainly for batch processing rather than interactive use. The emphasis is on high throughput of data access rather than low latency. It focuses on how to retrieve data …

The replication factor is a property set in the HDFS configuration file (`dfs.replication` in `hdfs-site.xml`) that adjusts the default replication factor used across the entire cluster. For each block …

Nothing requires the replication factor to be 3; that is simply the default Hadoop ships with. You can also set the replication factor individually for each file in HDFS.

If the replication factor is greater than 3, the 4th and subsequent replicas are placed randomly, while keeping the number of replicas per rack below an upper limit (which is …)
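The rule for replicas beyond the third can be sketched in Python. This is a simplified model under stated assumptions, not Hadoop's implementation: the per-rack cap `(replicas - 1) // racks + 2` is assumed from the default placement policy and should be checked against your Hadoop version; the topology, node names and helper functions are hypothetical; and real HDFS also weighs load, free space and node health when choosing targets.

```python
import random
from collections import Counter

def max_replicas_per_rack(replication, num_racks):
    # Assumption: the per-rack cap of Hadoop's default placement policy,
    # (replicas - 1) / racks + 2, using integer division.
    return (replication - 1) // num_racks + 2

def place_extra_replicas(existing, replication, topology, rng):
    """Choose DataNodes for the remaining replicas at random, under the per-rack cap.

    existing: DataNodes already holding the first replicas (e.g. the first three).
    topology: dict mapping each (hypothetical) DataNode name to its rack name.
    """
    cap = max_replicas_per_rack(replication, len(set(topology.values())))
    targets = list(existing)
    per_rack = Counter(topology[dn] for dn in targets)
    candidates = [dn for dn in topology if dn not in targets]
    rng.shuffle(candidates)  # random order = random choice among the remaining nodes
    for dn in candidates:
        if len(targets) == replication:
            break
        if per_rack[topology[dn]] < cap:  # skip nodes on racks that are already full
            targets.append(dn)
            per_rack[topology[dn]] += 1
    if len(targets) < replication:
        raise RuntimeError("not enough DataNodes to place all replicas under the rack cap")
    return targets

# Hypothetical cluster: 3 racks with 4 DataNodes each.
topology = {f"{rack}-dn{i}": rack for rack in ("rack1", "rack2", "rack3") for i in range(1, 5)}
first_three = ["rack1-dn1", "rack2-dn1", "rack2-dn2"]
targets = place_extra_replicas(first_three, 6, topology, random.Random(7))
print(max_replicas_per_rack(6, 3))                                # 3
print(max(Counter(topology[dn] for dn in targets).values()) <= 3)  # True
```

With 6 replicas over 3 racks the assumed cap is 3 per rack, so no single rack failure can take out more than half of a block's copies.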

The replication factor is the number of copies HDFS keeps of each block of a file. If the replication factor is 3, three copies of each block are stored on different DataNodes. So if one DataNode …
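A small Python simulation can make the fault-tolerance argument concrete. It is a toy model, not HDFS code: the DataNode and block names are hypothetical, replicas are placed on distinct nodes chosen at random (racks are ignored), and a block counts as readable as long as at least one of its replicas sits on a live node.

```python
import random
from itertools import combinations

def place_replicas(blocks, datanodes, replication, rng):
    """Store `replication` copies of each block on distinct DataNodes chosen at random."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    return {block: set(rng.sample(datanodes, replication)) for block in blocks}

def readable_after_failure(placement, failed_nodes):
    """True if every block still has at least one replica on a DataNode that is up."""
    return all(replicas - failed_nodes for replicas in placement.values())

rng = random.Random(42)
datanodes = [f"dn{i}" for i in range(1, 6)]
blocks = [f"blk_{i}" for i in range(10)]

# Replication factor 3: any two DataNodes can fail and every block is still readable.
placement = place_replicas(blocks, datanodes, 3, rng)
print(all(readable_after_failure(placement, set(pair))
          for pair in combinations(datanodes, 2)))  # True

# Replication factor 1: losing a single DataNode can make some block unreadable.
single = place_replicas(blocks, datanodes, 1, rng)
print(any(not readable_after_failure(single, {dn}) for dn in datanodes))  # True
```

With r replicas on distinct nodes, a block survives up to r - 1 simultaneous DataNode failures, at the cost of r times the raw storage.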

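The new replication factor applies only to new files, because replication is not an HDFS-wide setting but a per-file attribute. A common misreading is that after changing the default (say from 3 to 5) and restarting, old file blocks are replicated 5 times and new file blocks 3 times. It is the other way around: existing files created with a replication factor of 3 continue to carry 3 replicas, and only files created after the change get 5.

Let's look at how HDFS replication works. Each block has multiple copies in HDFS. A large file is split into multiple blocks, and with the default replication factor of 3 each block is stored on 3 different DataNodes. No two copies of a block are placed on the same DataNode. The default placement policy is rack-aware: the first copy goes on the writer's node (when the writer runs on a DataNode), the second on a node in a different rack, and the third on a different node in that same remote rack. Two of the three copies therefore share a rack, but they are the second and third, not the first two.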
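HDFS's default rack-aware policy for three replicas puts the first copy on the writer's node, the second on a node in another rack, and the third on a different node in that remote rack. A minimal Python sketch of that rule follows. It is a simplified illustration, not the Hadoop implementation: it assumes the writer runs on a DataNode and every rack has at least two nodes, uses hypothetical node and rack names, and skips the load, free-space and health checks HDFS applies when picking targets.

```python
import random

def default_three_replica_targets(writer, topology, rng):
    """Sketch of the default rack-aware placement for a replication factor of 3.

    `topology` maps each (hypothetical) DataNode name to its rack name.
    """
    local_rack = topology[writer]
    # Replica 1: the writer's own DataNode.
    first = writer
    # Replica 2: a random node on a different (remote) rack.
    second = rng.choice(sorted(dn for dn, rack in topology.items() if rack != local_rack))
    # Replica 3: a different node on the same remote rack as replica 2.
    third = rng.choice(sorted(dn for dn, rack in topology.items()
                              if rack == topology[second] and dn != second))
    return [first, second, third]

topology = {f"{rack}-dn{i}": rack for rack in ("rack1", "rack2", "rack3") for i in range(1, 4)}
targets = default_three_replica_targets("rack1-dn1", topology, random.Random(1))
racks = [topology[dn] for dn in targets]
print(len(set(targets)) == 3)            # True: no two copies on one DataNode
print(racks[1] == racks[2] != racks[0])  # True: copies 2 and 3 share a remote rack
```

Keeping two copies on one remote rack limits cross-rack write traffic, while the copy on a second rack still protects the block if an entire rack fails.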

Is it possible to set the replication factor for a specific directory in HDFS to a value different from the default replication factor? The setting should change the replication factor of existing files and also apply to new files created in that directory.
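In the standard HDFS shell, a directory has no replication factor of its own. `hdfs dfs -setrep <numReplicas> <path>` applied to a directory changes the replication of every existing file under it, while files created later still get the client's default (`dfs.replication`) unless a value is given at creation time. The toy model below sketches those semantics, including a default changed from 3 to 5; the `ToyNamespace` class and its methods are hypothetical and not an HDFS API.

```python
from dataclasses import dataclass, field

@dataclass
class ToyNamespace:
    """Hypothetical in-memory model: replication is stored per file, not per directory."""
    default_replication: int = 3                # stands in for the client's dfs.replication
    files: dict = field(default_factory=dict)   # file path -> replication factor

    def create(self, path, replication=None):
        # A new file records the replication in effect when it is created.
        self.files[path] = replication if replication is not None else self.default_replication

    def setrep(self, path, replication):
        # Modeled on setrep on a directory: every existing file under `path` is updated.
        prefix = path.rstrip("/") + "/"
        for p in self.files:
            if p == path or p.startswith(prefix):
                self.files[p] = replication

ns = ToyNamespace()
ns.create("/data/old.csv")       # created with the default of 3
ns.default_replication = 5       # change the default from 3 to 5
ns.create("/data/new.csv")       # only new files pick up 5
print(ns.files)  # {'/data/old.csv': 3, '/data/new.csv': 5}

ns.setrep("/data", 2)            # updates the files that already exist under /data
ns.create("/data/later.csv")     # a file created afterwards still gets the default (5)
print(ns.files)  # {'/data/old.csv': 2, '/data/new.csv': 2, '/data/later.csv': 5}
```

In this model, keeping a directory at a non-default replication means either re-running `setrep` after new files arrive or having writers pass the replication explicitly when they create files.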

